Metal³ development environment walkthrough part 2: Deploying a new bare metal cluster

Introduction

This blog post describes how to deploy a bare metal cluster (a virtual one, for simplicity) using Metal³/metal3-dev-env. We will briefly discuss the steps involved in setting up the cluster as well as some of the available customization options. If you want to know more about the architecture of Metal³, this blog post can be helpful.

This post builds upon the detailed metal3-dev-env walkthrough blog post, which describes the steps involved in setting up the environment and configuring the management cluster. Here we will use that environment to deploy a new Kubernetes cluster using Metal³.

Before we get started, there are a couple of requirements that need to be met.

Requirements

- Metal³ is already deployed and working. If not, please follow the instructions in the previously mentioned detailed metal3-dev-env walkthrough blog post.

- The appropriate environment variables are set up via the shell or in the config_${user}.sh file, for example:
  - CAPM3_VERSION
  - NUM_NODES
  - CLUSTER_NAME
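Before running any of the scripts, a quick pre-flight check can confirm that these variables are set and non-empty in the current shell. The sketch below is not part of metal3-dev-env: `check_required_env` is a hypothetical helper name, it needs bash for the indirect expansion, and the variable list in the usage line simply mirrors the examples above.

```shell
# Hypothetical pre-flight helper (not part of metal3-dev-env): reports every
# variable name passed as an argument whose value is unset or empty.
# Requires bash for the ${!var} indirect expansion.
check_required_env() {
  local var missing=0
  for var in "$@"; do
    # ${!var:-} expands to the value of the variable whose name is stored in $var.
    if [ -z "${!var:-}" ]; then
      echo "missing: ${var}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Example usage:
#   check_required_env CAPM3_VERSION NUM_NODES CLUSTER_NAME && echo "environment OK"
```

The helper only checks that the values are non-empty; it does not check whether they are exported to child processes.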

Overview of Config and Resource types

In this section, we give a brief overview of the important config files and resources used as part of the bare metal cluster deployment. The following sub-sections show the config files and resources that are created and give a brief description of some of them. This will help you understand the technical details of the cluster deployment. You can also choose to skip this section, visit the next section about provisioning first and then revisit this.

Config Files and Resource Types

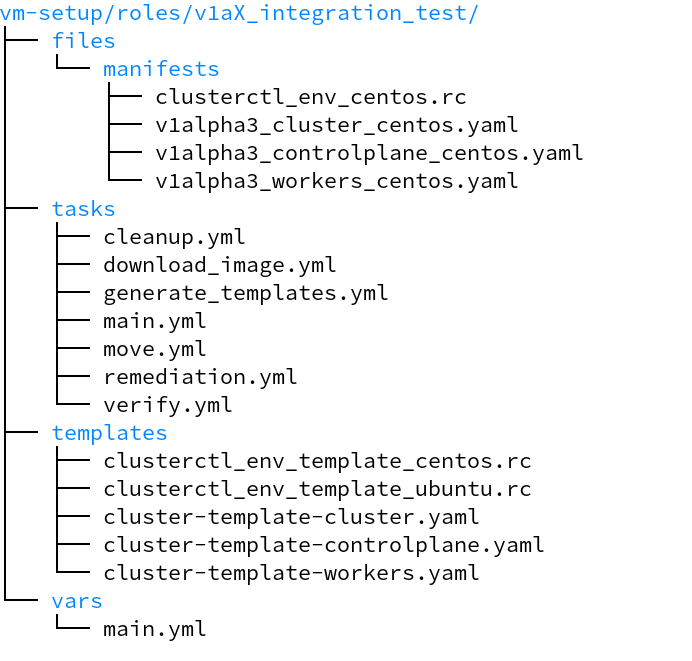

Information

Among these, the config files are rendered under the path https://github.com/metal3-io/metal3-dev-env/tree/master/vm-setup/roles/v1aX_integration_test/files as part of the provisioning process.

A description of some of the files that are part of provisioning a cluster in a CentOS-based environment:

| Name | Description | Path |
|---|---|---|
| provisioning scripts | Scripts to trigger provisioning of cluster, control plane or worker | ${metal3-dev-env}/scripts/provision/ |
| deprovisioning scripts | Scripts to trigger deprovisioning of cluster, control plane or worker | ${metal3-dev-env}/scripts/deprovision/ |
| templates directory | Templates for cluster, control plane, worker definitions | ${metal3-dev-env}/tests/roles/run_tests/templates |
| clusterctl env file | Cluster parameters and details | ${Manifests}/clusterctl_env_centos.rc |
| generate templates | Renders cluster, control plane and worker definitions in the Manifest directory | ${metal3-dev-env}/tests/roles/run_tests/tasks/generate_templates.yml |
| main vars file | Variable file that assigns all the defaults used during deployment | ${metal3-dev-env}/tests/roles/run_tests/vars/main.yml |

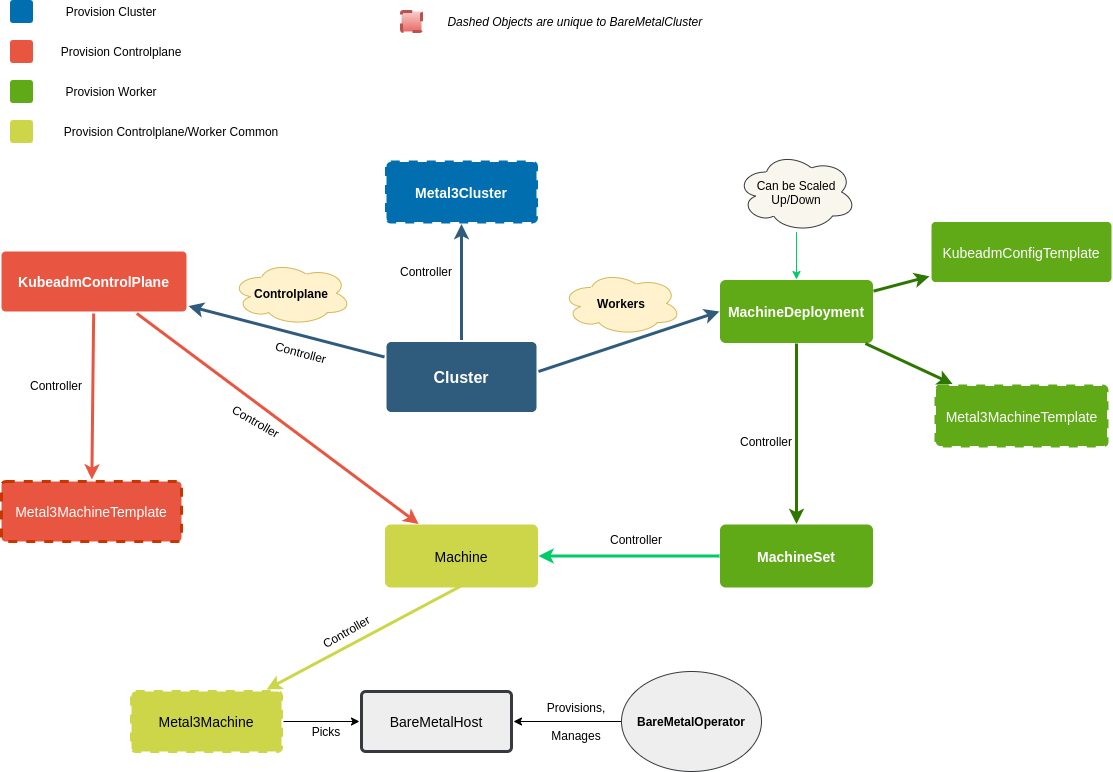

Here are some of the resources that are created as part of provisioning:

| Name | Description |
|---|---|
| Cluster | A Cluster API resource for managing a cluster |
| Metal3Cluster | Corresponding Metal3 resource generated as part of bare metal cluster deployment, and managed by Cluster |
| KubeadmControlPlane | Cluster API resource for managing the control plane; it also manages the Machine object and has the KubeadmConfig |
| MachineDeployment | Cluster API resource for managing workers via a MachineSet object; it can be used to add or remove workers by scaling up or down |
| MachineSet | Cluster API resource for managing Machine objects for worker nodes |
| Machine | Cluster API resource for managing nodes (control plane or workers). For the control plane, it is directly managed by KubeadmControlPlane, whereas for workers it is managed by a MachineSet |
| Metal3Machine | Corresponding Metal3 resource for managing bare metal nodes; it is managed by a Machine resource |
| Metal3MachineTemplate | Metal3 resource which acts as a template when creating a control plane or a worker node |
| KubeadmConfigTemplate | A template of KubeadmConfig for workers, used to generate a KubeadmConfig when a new worker node is provisioned |

Note

The corresponding KubeadmConfig is copied to the control plane/worker at the time of provisioning.
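Once provisioning has started, it can help to see all of these resources side by side. One way is to loop over the kinds from the table with plain `kubectl get`. This is a sketch: `list_cluster_resources` is a hypothetical helper name, and it assumes everything lives in the metal3 namespace, as in the rest of this post.

```shell
# Hypothetical helper: runs one `kubectl get` per Cluster API / Metal3 kind from
# the resource table, in the given namespace (default: metal3).
list_cluster_resources() {
  local ns="${1:-metal3}"
  local kind
  for kind in Cluster Metal3Cluster KubeadmControlPlane MachineDeployment \
              MachineSet Machine Metal3Machine Metal3MachineTemplate \
              KubeadmConfigTemplate; do
    echo "== ${kind} =="
    # Keep going even if a kind has no objects yet.
    kubectl get "${kind}" -n "${ns}" || true
  done
}

# Example usage:
#   list_cluster_resources metal3
```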

Bare Metal Cluster Deployment

The deployment scripts primarily use Ansible and the existing Kubernetes management cluster (based on minikube) for deploying the bare metal cluster. If you didn’t use config_${user}.sh to set the environment variables, make sure that the following variables used for the Metal³ deployment are set:

| Parameter | Description | Default |
|---|---|---|
| CAPM3_VERSION | Version of Metal3 API | v1alpha3 |
| POD_CIDR | Pod Network CIDR | 192.168.0.0/18 |
| CLUSTER_NAME | Name of bare metal cluster | test1 |
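If you set these in the shell rather than in config_${user}.sh, the `${VAR:-default}` pattern keeps any value you have already set and otherwise falls back to the defaults from the table above. This is a minimal sketch; the values are just the table's defaults, not recommendations.

```shell
# Keep an existing non-empty value; otherwise fall back to the default from the table.
export CAPM3_VERSION="${CAPM3_VERSION:-v1alpha3}"
export POD_CIDR="${POD_CIDR:-192.168.0.0/18}"
export CLUSTER_NAME="${CLUSTER_NAME:-test1}"
```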


Steps Involved

All the scripts for cluster provisioning or de-provisioning are located at ${metal3-dev-env}/scripts/.

The scripts call a common playbook which handles all the available tasks.

The steps involved in the process are:

- The script calls an Ansible playbook with the necessary parameters (from environment variables and defaults).

- The playbook executes the role ${metal3-dev-env}/tests/roles/run_tests, which runs the main task_file for provisioning/deprovisioning the cluster, control plane or a worker.

- There are templates in the role, which are used to render configurations in the Manifest directory. These configurations use kubeadm and are supplied to the Kubernetes module of Ansible to create the cluster.

- During provisioning, the clusterctl env file is generated first. Then the cluster, control plane and worker definition templates for clusterctl are generated at ${HOME}/.cluster-api/overrides/infrastructure-metal3/${CAPM3RELEASE}.

- Using the templates generated in the previous step, the definitions for resources related to the cluster, control plane and worker are rendered using clusterctl.

- A CentOS or Ubuntu image is downloaded in the next step.

- Finally, the above definitions are passed to the K8s module in Ansible, and the corresponding resource (cluster/control plane/worker) is provisioned.

- These same definitions are reused when de-provisioning the corresponding resource, again using the K8s module in Ansible.

Note

The manifest directory is created when provisioning is triggered for the first time and is subsequently used to store the config files that are rendered for deploying the bare metal cluster.
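Since each component has its own script, the whole flow can be chained in the order used in this post: cluster first, then control plane, then workers. The wrapper below is a hypothetical convenience, not part of metal3-dev-env. It takes the checkout path as an argument because ${metal3-dev-env} is only a placeholder here, and it stops at the first script that fails. It only runs the scripts in sequence. It does not wait for the control plane to finish provisioning before starting the worker step.

```shell
# Hypothetical wrapper: runs the provisioning scripts in order and stops at the
# first failure. $1 is the path to your metal3-dev-env checkout.
provision_all() {
  local repo="$1"
  local step
  for step in cluster controlplane worker; do
    echo "provisioning: ${step}"
    sh "${repo}/scripts/provision/${step}.sh" || {
      echo "provisioning failed at step: ${step}" >&2
      return 1
    }
  done
}

# Example usage:
#   provision_all ~/metal3-dev-env
```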

Provision Cluster

This script, located at ${metal3-dev-env}/scripts/provision/cluster.sh, provisions the cluster by creating a Metal3Cluster and a Cluster resource.

To check that the cluster was created successfully (it doesn’t have a control plane or workers yet), run:

kubectl get Metal3Cluster ${CLUSTER_NAME} -n metal3

This returns the deployed cluster, and you can check its details by describing the returned resource.

Here is what a Cluster resource looks like:

kubectl get Cluster ${CLUSTER_NAME} -n metal3 -o yaml

    apiVersion: cluster.x-k8s.io/v1alpha3
    kind: Cluster
    metadata: #[....]
    spec:
      clusterNetwork:
        pods:
          cidrBlocks:
          - 192.168.0.0/18
        services:
          cidrBlocks:
          - 10.96.0.0/12
      controlPlaneEndpoint:
        host: 192.168.111.249
        port: 6443
      controlPlaneRef:
        apiVersion: controlplane.cluster.x-k8s.io/v1alpha3
        kind: KubeadmControlPlane
        name: bmetalcluster
        namespace: metal3
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
        kind: Metal3Cluster
        name: bmetalcluster
        namespace: metal3
    status:
      infrastructureReady: true
      phase: Provisioned
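Instead of re-running the command by hand, you can poll the `status.phase` field shown above with a JSONPath query until it reaches the value you expect. This is a sketch under a few assumptions: `wait_for_phase` is a hypothetical bash helper, the namespace is fixed to metal3 as in this post, and the 10-second interval and default limit of 30 attempts are arbitrary.

```shell
# Hypothetical helper: polls .status.phase of a resource in the metal3 namespace
# until it matches the expected value or the attempt limit is reached.
# Usage: wait_for_phase <kind> <name> <phase> [attempts]
wait_for_phase() {
  local kind="$1" name="$2" want="$3" attempts="${4:-30}"
  local i phase
  for ((i = 1; i <= attempts; i++)); do
    phase="$(kubectl get "${kind}" "${name}" -n metal3 -o jsonpath='{.status.phase}')"
    if [ "${phase}" = "${want}" ]; then
      echo "${kind}/${name} is ${phase}"
      return 0
    fi
    echo "${kind}/${name} phase: ${phase:-<none>} (attempt ${i}/${attempts})"
    sleep 10
  done
  return 1
}

# Example usage:
#   wait_for_phase Cluster "${CLUSTER_NAME}" Provisioned
```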

Provision Control Plane

This script, located at ${metal3-dev-env}/scripts/provision/controlplane.sh, provisions the control plane member of the cluster using the rendered control plane definition explained in the Steps Involved section. The KubeadmControlPlane creates a Machine, which picks up a BareMetalHost that satisfies its requirements to serve as the control plane node. The Bare Metal Operator then provisions that host. A Metal3MachineTemplate resource is also created as part of the provisioning process.

Note

Provisioning the control plane takes some time. You can watch the progress using the steps shared a bit later.

kubectl get KubeadmControlPlane ${CLUSTER_NAME} -n metal3

Here is what a KubeadmControlPlane resource looks like:

kubectl get KubeadmControlPlane ${CLUSTER_NAME} -n metal3 -o yaml

    apiVersion: controlplane.cluster.x-k8s.io/v1alpha3
    kind: KubeadmControlPlane
    metadata: #[....]
      ownerReferences:
      - apiVersion: cluster.x-k8s.io/v1alpha3
        blockOwnerDeletion: true
        controller: true
        kind: Cluster
        name: bmetalcluster
        uid: aec0f73b-a068-4992-840d-6330bf943d22
      resourceVersion: "44555"
      selfLink: /apis/controlplane.cluster.x-k8s.io/v1alpha3/namespaces/metal3/kubeadmcontrolplanes/bmetalcluster
      uid: 99487c75-30f1-4765-b895-0b83b0e5402b
    spec:
      infrastructureTemplate:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
        kind: Metal3MachineTemplate
        name: bmetalcluster-controlplane
        namespace: metal3
      kubeadmConfigSpec:
        files:
        - content: #[....]
      replicas: 1
      version: v1.18.0
    status:
      replicas: 1
      selector: cluster.x-k8s.io/cluster-name=bmetalcluster,cluster.x-k8s.io/control-plane=
      unavailableReplicas: 1
      updatedReplicas: 1
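The status above shows `unavailableReplicas: 1`, which means the control plane machine is not ready yet. One way to check readiness from a script is to compare the desired `spec.replicas` with `status.readyReplicas`. The latter may be absent while no replica is ready, as in the output above. This is a sketch: `kcp_is_ready` is a hypothetical helper, and the field names are assumed from the v1alpha3 KubeadmControlPlane status.

```shell
# Hypothetical helper: succeeds when every desired control plane replica is ready.
# Usage: kcp_is_ready <name>
kcp_is_ready() {
  local name="$1" desired ready
  desired="$(kubectl get KubeadmControlPlane "${name}" -n metal3 -o jsonpath='{.spec.replicas}')"
  ready="$(kubectl get KubeadmControlPlane "${name}" -n metal3 -o jsonpath='{.status.readyReplicas}')"
  # An absent readyReplicas field is treated as zero ready replicas.
  [ -n "${desired}" ] && [ "${ready:-0}" -eq "${desired}" ]
}

# Example usage:
#   kcp_is_ready "${CLUSTER_NAME}" && echo "control plane ready"
```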

To check the Metal3MachineTemplate created for the control plane, run:

kubectl get Metal3MachineTemplate ${CLUSTER_NAME}-controlplane -n metal3

To track the progress of provisioning, you can try the following:

kubectl get BareMetalHosts -n metal3 -w

The BareMetalHosts resources were created when Metal³/metal3-dev-env was deployed. A BareMetalHost is a Kubernetes resource that represents a bare metal machine, with all its details and configuration, and it is managed by the Bare Metal Operator. You can also use the short name bmh (short for BareMetalHosts) in the command above. You should see all the nodes that were created during the Metal³ deployment, along with their current status as the provisioning progresses.
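For a more compact view than the watch output, a JSONPath query can print only each host's name and provisioning state. This assumes the BareMetalHost status exposes `status.provisioning.state`, as the Bare Metal Operator's API does. `bmh_states` is a hypothetical helper name.

```shell
# Hypothetical helper: prints "<host> <provisioning state>" for every BareMetalHost
# in the metal3 namespace, using a JSONPath range over the list.
bmh_states() {
  kubectl get bmh -n metal3 \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.provisioning.state}{"\n"}{end}'
}

# Example usage: count the hosts that are already provisioned
#   bmh_states | awk '$2 == "provisioned"' | wc -l
```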

Note

All the bare metal hosts listed above were created when Metal³ was deployed in the detailed metal3-dev-env walkthrough blogpost.

kubectl get Machine -n metal3 -w

This shows the status of the Machine associated with the control plane, and we can watch the progress of provisioning in the PHASE column.
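To check all machines at once instead of watching, you can compare each Machine's `status.phase` against `Running`, the phase a Machine reports once its node has joined the cluster. This is a sketch: `all_machines_running` is a hypothetical helper that reads name/phase pairs from standard input, so you can try the parsing without a cluster.

```shell
# Hypothetical helper: reads "<machine> <phase>" lines on stdin and succeeds only
# if there is at least one machine and every machine is in the Running phase.
all_machines_running() {
  awk '
    { total++; if ($2 != "Running") { print "not running: " $1 " (" $2 ")"; bad++ } }
    END { exit (total == 0 || bad > 0) }
  '
}

# Example usage:
#   kubectl get machines -n metal3 \
#     -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}' \
#     | all_machines_running && echo "all machines are Running"
```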

Once the provisioning is finished, let’s get the host IP:

sudo virsh net-dhcp-leases baremetal

Information

baremetal is one of the two networks that were created during the Metal³ deployment. The other is “provisioning”, which, as you might have guessed, is used for provisioning the bare metal cluster. More details about the networking setup in the metal3-dev-env environment are described in the detailed metal3-dev-env walkthrough blog post.
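To pick out a single host's address from the leases table in a script, you can filter by the Hostname column. This sketch assumes the usual `virsh net-dhcp-leases` layout, where the expiry date and time take up the first two whitespace-separated fields. In that layout the IP address (with prefix length) is field 5 and the hostname is field 6. `lease_ip` is a hypothetical helper, and the hostname you pass in depends on your environment.

```shell
# Hypothetical helper: reads `virsh net-dhcp-leases` output on stdin and prints the
# address (without the /prefix) leased to the given hostname.
# Assumed columns: expiry date, expiry time, MAC, protocol, IP/prefix, hostname, client ID
lease_ip() {
  awk -v host="$1" '$6 == host { split($5, addr, "/"); print addr[1] }'
}

# Example usage:
#   sudo virsh net-dhcp-leases baremetal | lease_ip <hostname>
```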

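If you want, you can log in to the control plane node and check the deployment status from there. Replace {control-plane-node-ip} with the address from the DHCP leases. In this example, it is 192.168.111.249: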

ssh metal3@{control-plane-node-ip}

ssh metal3@192.168.111.249

Provision Workers

The script is located at ${metal3-dev-env}/scripts/provision/worker.sh, and it provisions a node to be added to the bare metal cluster as a worker. It selects one of the remaining nodes, provisions it, and adds it to the bare metal cluster (which only has a control plane node at this point). The following resources are created for workers:

- a MachineDeployment, which can be scaled up to add more workers to the cluster
- a MachineSet, which then creates a Machine that manages the node

Information

Similar to control plane provisioning, worker provisioning also takes some time, and you can watch the process using the steps shared a bit later. This also applies when you scale workers up or down later on.

This is what a MachineDeployment looks like:

kubectl get MachineDeployment ${CLUSTER_NAME} -n metal3 -o yaml

    apiVersion: cluster.x-k8s.io/v1alpha3
    kind: MachineDeployment
    metadata: #[....]
      ownerReferences:
      - apiVersion: cluster.x-k8s.io/v1alpha3
        kind: Cluster
        name: bmetalcluster
        uid: aec0f73b-a068-4992-840d-6330bf943d22
      resourceVersion: "66257"
      selfLink: /apis/cluster.x-k8s.io/v1alpha3/namespaces/metal3/machinedeployments/bmetalcluster
      uid: f598da43-0afe-44e4-b793-cd5244c13f4e
    spec:
      clusterName: bmetalcluster
      minReadySeconds: 0
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 1
      selector:
        matchLabels:
          cluster.x-k8s.io/cluster-name: bmetalcluster
          nodepool: nodepool-0
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 0
        type: RollingUpdate
      template:
        metadata:
          labels:
            cluster.x-k8s.io/cluster-name: bmetalcluster
            nodepool: nodepool-0
        spec:
          bootstrap:
            configRef:
              apiVersion: bootstrap.cluster.x-k8s.io/v1alpha3
              kind: KubeadmConfigTemplate
              name: bmetalcluster-workers
          clusterName: bmetalcluster
          infrastructureRef:
            apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
            kind: Metal3MachineTemplate
            name: bmetalcluster-workers
          version: v1.18.0
    status:
      observedGeneration: 1
      phase: ScalingUp
      replicas: 1
      selector: cluster.x-k8s.io/cluster-name=bmetalcluster,nodepool=nodepool-0
      unavailableReplicas: 1
      updatedReplicas: 1

To check the status, we can follow steps similar to the control plane case:

kubectl get bmh -n metal3 -w

We can see the live status of the node being provisioned. As mentioned before, bmh is the short name for BareMetalHosts.

kubectl get Machine -n metal3 -w

This shows the status of the Machines associated with the workers, in addition to the one for the control plane, and we can watch the progress of provisioning in the PHASE column.

To get the node’s IP:

sudo virsh net-dhcp-leases baremetal

To check whether the node has been added to the cluster, log in to the control plane node and list the nodes:

ssh metal3@{control-plane-node-ip}

kubectl get nodes

If you want to log in to the node itself:

ssh metal3@{node-ip}

We can add workers to the cluster or remove them by scaling the MachineDeployment up or down. In this example, we add 2 more worker nodes, bringing the total number of worker nodes to 3:

kubectl scale --replicas=3 MachineDeployment ${CLUSTER_NAME} -n metal3
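After scaling, you can confirm from the control plane node that the nodes have joined and are Ready by counting the STATUS column of `kubectl get nodes --no-headers`. This is a minimal sketch: `count_ready_nodes` is a hypothetical helper. With 3 workers plus the control plane node, you would expect 4 once scaling has finished.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on stdin and
# prints how many nodes report exactly "Ready" in the STATUS column (field 2).
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example usage (on the control plane node):
#   kubectl get nodes --no-headers | count_ready_nodes
```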

Deprovisioning

Each of the previous components has a corresponding de-provisioning script. These scripts use the config files in the previously mentioned manifest directory to clean up the worker, control plane and cluster.

Each script reuses the already generated cluster, control plane or worker definition file and supplies it to the Kubernetes module of Ansible to remove (de-provision) the resource. You can find these files under the Manifest directory in the snapshot of the file structure shared at the beginning of this blog post.

For example, if you wish to de-provision the cluster, you would do:

sh ${metal3-dev-env}/scripts/deprovision/worker.sh

sh ${metal3-dev-env}/scripts/deprovision/controlplane.sh

sh ${metal3-dev-env}/scripts/deprovision/cluster.sh

Note

The reason for running the deprovision/worker.sh and deprovision/controlplane.sh scripts is that not all objects are cleared when we only run the deprovision/cluster.sh script.
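The three de-provisioning commands can be wrapped so that they always run in this order: worker, control plane, cluster. This is a hypothetical helper that mirrors the commands above, not part of metal3-dev-env. It takes the checkout path as an argument and stops at the first failing script so you can inspect what is left.

```shell
# Hypothetical wrapper: runs the de-provisioning scripts in the order shown above
# (worker, control plane, cluster) and stops at the first failure.
# $1 is the path to your metal3-dev-env checkout.
deprovision_all() {
  local repo="$1"
  local step
  for step in worker controlplane cluster; do
    echo "de-provisioning: ${step}"
    sh "${repo}/scripts/deprovision/${step}.sh" || {
      echo "de-provisioning failed at step: ${step}" >&2
      return 1
    }
  done
}

# Example usage:
#   deprovision_all ~/metal3-dev-env
```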

Following this, if you want to de-provision the control plane, it is recommended to de-provision the cluster itself as well, because you can’t provision a new control plane for the same cluster. To de-provision only the workers, you only need to run the worker script.

The following video demonstrates all the steps to provision and de-provision a Kubernetes cluster explained above.

Summary

In this blog post, we saw how to deploy a bare metal cluster once Metal³ (the metal3-dev-env repo) is deployed. By that point, the nodes are already available for a bare metal cluster deployment.

In the first section, we show the various configuration files, templates and resource types, and what they mean. Then we see the common steps involved in the provisioning process. After that, we see a general overview of how all resources are related and at what point they are created during cluster, control plane and worker provisioning.

In each of the provisioning sections, we see the steps to monitor the provisioning and how to confirm whether it’s successful, with brief explanations wherever required. Finally, we see the de-provisioning section, which uses the resource definitions generated at provisioning time to de-provision the cluster, control plane or worker.

Here are a few resources which you might find useful if you want to explore further, some of them have already been shared earlier.